41 releases (5 breaking)

| Version | Release date |
|---|---|
| 0.6.0 | Jun 22, 2024 |
| 0.5.3 | Jun 8, 2024 |
| 0.4.9 | Jun 2, 2024 |
| 0.4.4 | Feb 4, 2024 |
| 0.1.0 | Jan 2, 2024 |

#191 in HTTP server

2,475 downloads per month

2MB

1.5K SLoC

Run llama.cpp executables for completions and embeddings behind a simple, local, private HTTP API.

Privacy goals:

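- The server is stateless

- It always runs on localhost

- It never writes logs

- It never puts prompts in console logs

- It is MIT licensed, so you can modify the code however you like to suit your specific needs

The goal of this project is to offer a completely clear and transparent way to run a server locally. The code is kept as simple as possible so that you know exactly what you are running.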

You can install it by downloading a binary for your operating system from the releases page, or, if you have Rust installed, with:

cargo install epistemology

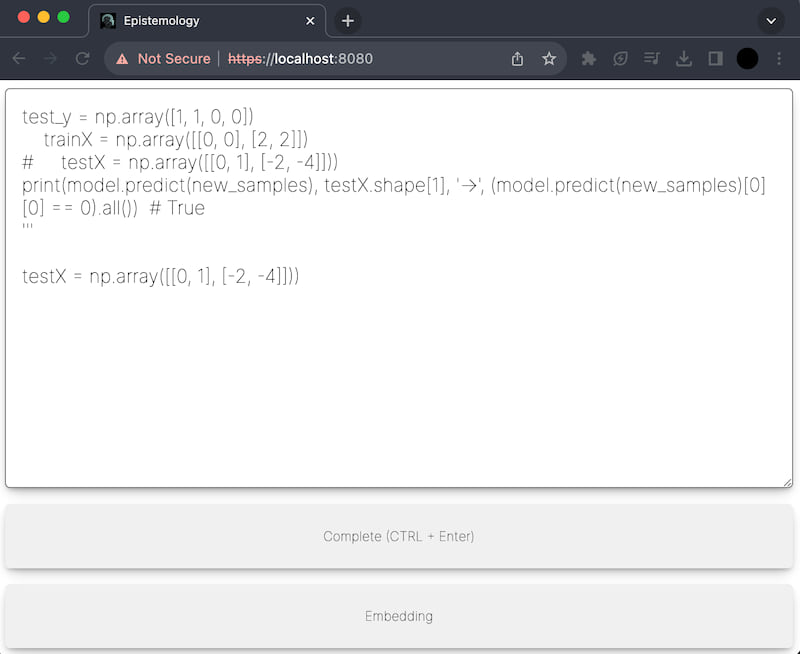

Example

epistemology -m ../llama.cpp/phi-2.Q2_K.gguf -e ../llama.cpp/main -d ../llama.cpp/embedding

Serving UI on https://localhost:8080/ from built-in UI
Listening with GET and POST on https://localhost:8080/api/completion
Examples:
* https://localhost:8080/api/completion?prompt=famous%20quote:
* curl -X POST -d "famous quote:" https://localhost:8080/api/completion
* curl -X POST -d "robots are good" https://localhost:8080/api/embedding
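The GET form of the completion endpoint takes the prompt as a percent-encoded `prompt` query parameter, which is why the space in the example URL appears as `%20`. The std-only Rust sketch below builds such a URL. The helper names `percent_encode` and `completion_url` are hypothetical, and escaping every byte outside the RFC 3986 unreserved set is an assumption about what the server's query parser accepts; it turns `:` into `%3A`, which standard percent-decoding maps back to `:`.

```rust
/// Percent-encode a query-parameter value: keep RFC 3986 unreserved
/// characters (A-Z a-z 0-9 - . _ ~) and escape every other UTF-8 byte as %XX.
fn percent_encode(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    for byte in input.bytes() {
        match byte {
            b'A'..=b'Z' | b'a'..=b'z' | b'0'..=b'9' | b'-' | b'.' | b'_' | b'~' => {
                out.push(byte as char);
            }
            _ => out.push_str(&format!("%{:02X}", byte)),
        }
    }
    out
}

/// Hypothetical helper: GET URL for the completion endpoint on `base`.
fn completion_url(base: &str, prompt: &str) -> String {
    format!(
        "{}/api/completion?prompt={}",
        base.trim_end_matches('/'),
        percent_encode(prompt)
    )
}

fn main() {
    println!("{}", completion_url("https://localhost:8080", "famous quote:"));
}
```

Running it prints `https://localhost:8080/api/completion?prompt=famous%20quote%3A`. Non-ASCII prompts are encoded byte by byte, so `é` becomes `%C3%A9`.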

You can also serve your own web interface from a static path:

epistemology -m ../llama.cpp/phi-2.Q2_K.gguf -e ../llama.cpp/main -d ../llama.cpp/embedding -u ./my-web-interface

Serving UI on https://localhost:8080/ from ./my-web-interface
Listening with GET and POST on https://localhost:8080/api/completion
Examples:
* https://localhost:8080/api/completion?prompt=famous%20quote:
* curl -X POST -d "famous quote:" https://localhost:8080/api/completion
* curl -X POST -d "robots are good" https://localhost:8080/api/embedding

You can also use a *.gbnf file to constrain the output grammar, for example to force JSON output:

epistemology -m ../llama.cpp/phi-2.Q2_K.gguf -e ../llama.cpp/main -d ../llama.cpp/embedding -g ./json.gbnf

Serving UI on https://localhost:8080/ from built-in UI
Listening with GET and POST on https://localhost:8080/api/completion
Examples:
* https://localhost:8080/api/completion?prompt=famous%20quote:
* curl -X POST -d "famous quote:" https://localhost:8080/api/completion
* curl -X POST -d "robots are good" https://localhost:8080/api/embedding

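Constraining output to a JSON schema

Constraining AI output to structured data makes it much more useful programmatically. This project uses its sister project GBNF-rs to turn a JSON schema file into a grammar.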
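The idea behind schema-to-grammar conversion can be sketched for the simplest case: a flat object whose properties are all strings or numbers. The Rust function below is a hypothetical illustration, not GBNF-rs's code or API. It emits a `root` rule in llama.cpp's GBNF notation that forces the keys in a fixed order, plus deliberately simplified `string` (no escape sequences), `number` (no exponents) and `ws` rules.

```rust
/// The only property types this sketch understands (a deliberate simplification).
#[derive(Clone, Copy, Debug)]
enum FieldType {
    Str,
    Num,
}

/// Emit a GBNF grammar that accepts exactly one JSON object with the given
/// properties, in the given order, and nothing else.
fn object_schema_to_gbnf(fields: &[(&str, FieldType)]) -> String {
    // root: "{" then `"key" : value` pairs separated by "," then "}"
    let mut root = String::from(r#"root ::= "{" ws"#);
    for (i, (name, ty)) in fields.iter().enumerate() {
        if i > 0 {
            root.push_str(r#" "," ws"#);
        }
        let value_rule = match ty {
            FieldType::Str => "string",
            FieldType::Num => "number",
        };
        // The key is a literal terminal such as "\"quote\"".
        root.push_str(&format!(r#" "\"{}\"" ws ":" ws {} ws"#, name, value_rule));
    }
    root.push_str(r#" "}""#);

    [
        root,
        // Strings without escape sequences (simplified).
        r#"string ::= "\"" [^"\\]* "\"""#.to_string(),
        // Integers or decimals, no exponent (simplified).
        r#"number ::= "-"? [0-9]+ ("." [0-9]+)?"#.to_string(),
        r#"ws ::= [ \t\n]*"#.to_string(),
    ]
    .join("\n")
}

fn main() {
    // The four properties of the example schema below, in the same order.
    let fields = [
        ("quote", FieldType::Str),
        ("firstName", FieldType::Str),
        ("lastName", FieldType::Str),
        ("age", FieldType::Num),
    ];
    println!("{}", object_schema_to_gbnf(&fields));
}
```

In this sketch the grammar fixes the property names, so a constrained model cannot emit a key outside the schema, while the `description` fields appear nowhere in the grammar.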

Suppose you have a file named "my-schema.json" containing a JSON schema:

{
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  "$id": "https://example.com/product.schema.json",
  "title": "Product",
  "description": "Famous quote and person generator",
  "type": "object",
  "properties": {
    "quote": {
      "description": "A famous quote most people would know",
      "type": "string"
    },
    "firstName": {
      "description": "The author's first name.",
      "type": "string"
    },
    "lastName": {
      "description": "The author's last name.",
      "type": "string"
    },
    "age": {
      "description": "Age in years which must be equal to or greater than zero.",
      "type": "number"
    }
  }
}

epistemology -m ../llama.cpp/phi-2.Q2_K.gguf -e ../llama.cpp/main -d ../llama.cpp/embedding -j ./my-schema.json

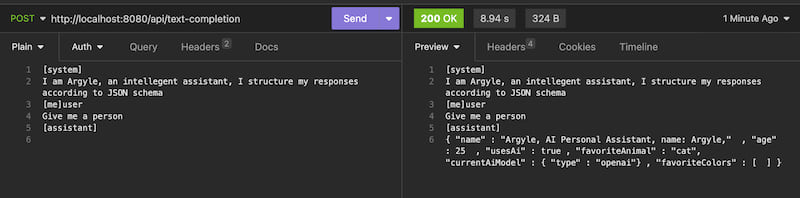

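Now we can ask the AI a question and get an answer that conforms to our JSON format. Because much of the schema's metadata (such as the field descriptions) is lost when it is converted into a grammar, we should restate the information we want to steer generation with in the system prompt.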

HTTP POST https://localhost:8080/api/completion

[system]
I am Argyle, an intelligent assistant, I structure my responses according to JSON schema
{
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  "$id": "https://example.com/product.schema.json",
  "title": "Product",
  "description": "Famous quote and person generator",
  "type": "object",
  "properties": {
    "quote": {
      "description": "A famous quote most people would know from the author's book",
      "type": "string"
    },
    "firstName": {
      "description": "The author's first name.",
      "type": "string"
    },
    "lastName": {
      "description": "The author's last name.",
      "type": "string"
    },
    "age": {
      "description": "Age in years which must be equal to or greater than zero.",
      "type": "number"
    }
  }
}
[user]
Generate me a famous quote?
[assistant]

Output:

{
  "quote" : "The sky above the port was the color of television, tuned to a dead channel.",
  "firstName" : "William",
  "lastName" : "William",
  "age": 75.0
}
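Because the request body is plain text, a system prompt that restates the schema can be assembled programmatically. This Rust sketch renders turns in the bracketed `[role]` layout of the request above and ends with an open `[assistant]` marker for the model to complete. The `Turn` struct and `build_prompt` function are hypothetical, the one-newline-per-turn whitespace is an assumption, and the file name `my-schema.json` is taken from the `-j` example.

```rust
use std::fs;

/// One turn of the conversation: a role name and its text.
struct Turn<'a> {
    role: &'a str,
    text: &'a str,
}

/// Render turns as "[role]\ntext\n" and end with an open "[assistant]" marker.
fn build_prompt(turns: &[Turn<'_>]) -> String {
    let mut prompt = String::new();
    for turn in turns {
        prompt.push_str(&format!("[{}]\n{}\n", turn.role, turn.text.trim()));
    }
    prompt.push_str("[assistant]\n");
    prompt
}

fn main() {
    // Restate the schema (the same file passed to -j); fall back to "{}" if it is missing.
    let schema = fs::read_to_string("my-schema.json").unwrap_or_else(|_| "{}".to_string());
    let system = format!(
        "I am Argyle, an intelligent assistant, I structure my responses according to JSON schema\n{}",
        schema.trim()
    );
    let prompt = build_prompt(&[
        Turn { role: "system", text: &system },
        Turn { role: "user", text: "Generate me a famous quote?" },
    ]);
    print!("{}", prompt);
}
```

The resulting string is what you would send as the POST body to `/api/completion`.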

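Advanced: How do I make things run faster?

The three main knobs to tune are the number of layers offloaded to the GPU, the number of threads, and the context size (`-l`, `-t` and `-c` in the example below):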

epistemology -m phi2.gguf -e ../llama.cpp/main.exe -l 35 -t 16 -c 50000
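The same invocation can be assembled from a program, which is convenient if you want to derive the thread count from the machine instead of hard-coding `-t 16`. The Rust sketch below only builds a `std::process::Command` without spawning it. The `tuned_command` name and the heuristic of one thread per logical CPU (`std::thread::available_parallelism`, falling back to 4) are assumptions, not guidance from the project, and the `-l 35` and `-c 50000` values simply mirror the example.

```rust
use std::process::Command;
use std::thread;

/// Hypothetical helper: build (not spawn) an epistemology command with the
/// three tuning knobs: GPU layers (-l), threads (-t) and context size (-c).
fn tuned_command(model: &str, exe: &str, gpu_layers: u32, context: u32) -> Command {
    // Heuristic assumption: one thread per logical CPU, or 4 if unknown.
    let threads = thread::available_parallelism()
        .map(|n| n.get())
        .unwrap_or(4);
    let mut cmd = Command::new("epistemology");
    cmd.args(["-m", model, "-e", exe])
        .arg("-l")
        .arg(gpu_layers.to_string())
        .arg("-t")
        .arg(threads.to_string())
        .arg("-c")
        .arg(context.to_string());
    cmd
}

fn main() {
    let cmd = tuned_command("phi2.gguf", "../llama.cpp/main.exe", 35, 50000);
    println!("{:?}", cmd);
}
```

Call `.status()` or `.spawn()` on a mutable binding of the returned command to start the server. Fewer threads than logical CPUs (for example, the physical core count) can sometimes be faster, so treat the heuristic as a starting point.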

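Advanced: Why do I see an error about an invalid certificate?

Epistemology always uses HTTPS for secure communication. By default it generates a random certificate that is not registered on your machine. If you want to get rid of this message, create your own certificate, add it to your machine's list of trusted certificates, and then run Epistemology like this: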

epistemology -m phi2.gguf -e ../llama.cpp/main.exe --http-key-file key.pem --http-cert-file cert.pem

Advanced: Running Epistemology on Windows with an AMD Radeon GPU and a specific layer count

$env:GGML_OPENCL_PLATFORM = "AMD"
$env:GGML_OPENCL_DEVICE = "1" # you can change devices here
epistemology -m phi2.gguf -e ../llama.cpp/main.exe -n 40
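The PowerShell `$env:` assignments above apply to the whole session. If you launch Epistemology from a Rust program instead, `Command::env` sets the same two variables for the child process only. In this sketch the `amd_command` helper is hypothetical, the device index is a parameter (the example uses `1`), and the `-n 40` argument is copied unchanged from the example.

```rust
use std::process::Command;

/// Hypothetical helper: build (not spawn) the AMD/OpenCL invocation from the
/// example, with the two GGML_OPENCL_* variables set for the child only.
fn amd_command(model: &str, exe: &str, device: u32) -> Command {
    let mut cmd = Command::new("epistemology");
    cmd.env("GGML_OPENCL_PLATFORM", "AMD")
        .env("GGML_OPENCL_DEVICE", device.to_string())
        .args(["-m", model, "-e", exe, "-n", "40"]);
    cmd
}

fn main() {
    let cmd = amd_command("phi2.gguf", "../llama.cpp/main.exe", 1);
    println!("{:?}", cmd);
}
```

Calling `.status()` on a mutable binding of the returned command starts the server with those variables set, without changing the environment of your own process.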

Dependencies

~26–39MB

~799K SLoC