3 unstable releases

Uses old Rust 2015

| Version | Release date |
|---|---|
| 0.2.1 | Aug 20, 2017 |
| 0.2.0 | Aug 20, 2017 |
| 0.1.0 | Jul 14, 2017 |

#1777 in Algorithms

40 downloads per month

105KB

1.5K SLoC

revonet

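Rust implementation of a real-coded genetic algorithm for solving optimization problems and training neural networks. The latter is also known as neuroevolution.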
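In a real-coded algorithm each individual is simply a vector of floating-point genes, so the variation operators work directly on numbers rather than on bit strings. The following is a minimal sketch of two common operators, blend crossover and uniform mutation. It is not taken from revonet: the names `XorShift`, `blend_crossover`, and `mutate` are hypothetical, and the small generator only exists so the sketch needs no external crates.

```rust
/// Tiny xorshift generator (hypothetical helper, not part of revonet).
/// The seed must be non-zero.
struct XorShift(u64);

impl XorShift {
    /// Next pseudo-random value in [0, 1).
    fn next_f32(&mut self) -> f32 {
        self.0 ^= self.0 << 13;
        self.0 ^= self.0 >> 7;
        self.0 ^= self.0 << 17;
        // Keep the top 24 bits so the value is exactly representable as f32.
        (self.0 >> 40) as f32 / (1u32 << 24) as f32
    }
}

/// Blend crossover: each child gene lies between the two parent genes.
/// Assumption: both parents have the same length.
fn blend_crossover(a: &[f32], b: &[f32], rng: &mut XorShift) -> Vec<f32> {
    a.iter()
        .zip(b)
        .map(|(&x, &y)| {
            let t = rng.next_f32();
            t * x + (1.0 - t) * y
        })
        .collect()
}

/// Uniform mutation: shift every gene by at most `step` in either direction.
fn mutate(genes: &mut [f32], step: f32, rng: &mut XorShift) {
    for g in genes.iter_mut() {
        *g += (rng.next_f32() * 2.0 - 1.0) * step;
    }
}
```

A generation would typically pick two parents, call `blend_crossover` on their gene vectors, and then apply `mutate` to the child before evaluating it.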

Features

- real-coded evolutionary algorithm (EA)

- neuroevolutionary (NE) tuning of the weights of a neural network with a fixed structure

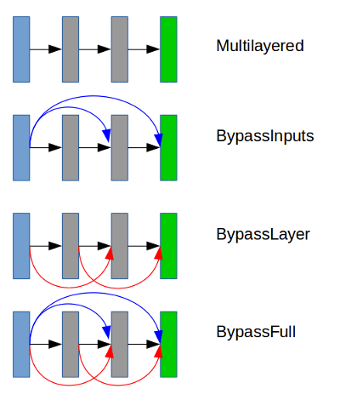

- supports several feed-forward architectures

- automatically computes statistics for single and multiple runs of EA and NE

- EA settings and results can be saved to JSON

- allows user-defined objective functions for EA and NE (see the examples below)
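To illustrate what statistics over multiple runs can look like, here is a small sketch that aggregates the best fitness reached in each independent run. The `MultiRunSummary` type and the `summarize` function are hypothetical and do not reflect the fields revonet actually reports. The sketch also assumes that lower fitness is better.

```rust
/// Summary of the best fitness reached in each of several independent runs.
/// Hypothetical type; revonet's own result structures may differ.
#[derive(Debug, PartialEq)]
struct MultiRunSummary {
    best: f32,
    mean: f32,
    worst: f32,
}

/// Aggregate per-run best fitness values.
/// Assumption: lower fitness is better. Returns `None` for an empty slice.
fn summarize(best_per_run: &[f32]) -> Option<MultiRunSummary> {
    if best_per_run.is_empty() {
        return None;
    }
    let best = best_per_run.iter().copied().fold(f32::INFINITY, f32::min);
    let worst = best_per_run.iter().copied().fold(f32::NEG_INFINITY, f32::max);
    let mean = best_per_run.iter().sum::<f32>() / best_per_run.len() as f32;
    Some(MultiRunSummary { best, mean, worst })
}
```

Reporting the mean and the worst case alongside the best run gives a fairer picture of a stochastic optimizer than a single lucky result.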

Examples

Real-coded genetic algorithm

let pop_size = 20u32; // population size.

let problem_dim = 10u32; // number of optimization parameters.

let problem = RosenbrockProblem{}; // objective function.

let gen_count = 10u32; // generations number.

let settings = GASettings::new(pop_size, gen_count, problem_dim);

let mut ga: GA<RosenbrockProblem> = GA::new(settings, &problem); // init GA.

let res = ga.run(settings).expect("Error during GA run"); // run and fetch the results.

// get and print results of the current run.

println!("\n\nGA results: {:?}", res);

// make multiple runs and get combined results.

let res = ga.run_multiple(settings, 10 as u32).expect("Error during multiple GA runs");

println!("\n\nResults of multiple GA runs: {:?}", res);
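For reference, the objective in this example is presumably the standard generalized Rosenbrock function. That is an assumption based on the name `RosenbrockProblem`, not something confirmed by the crate source. A minimal standalone version, with the hypothetical name `rosenbrock`, could look like this:

```rust
/// Generalized Rosenbrock function.
/// Its global minimum is 0, reached at x = (1, ..., 1).
/// Assumption: this mirrors what `RosenbrockProblem` evaluates.
fn rosenbrock(x: &[f32]) -> f32 {
    x.windows(2)
        .map(|w| 100.0 * (w[1] - w[0] * w[0]).powi(2) + (1.0 - w[0]).powi(2))
        .sum()
}
```

The function has a narrow curved valley around its minimum, which makes it a common benchmark for optimizers. A GA that works should drive the best fitness toward 0 as the generation count grows.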

Run evolution of neural network weights to solve a regression problem

let (pop_size, gen_count, param_count) = (20, 20, 100); // param_count does not matter here as the NN structure is defined by the problem.

let settings = EASettings::new(pop_size, gen_count, param_count);

let problem = SymbolicRegressionProblem::new_f();

let mut ne: NE<SymbolicRegressionProblem> = NE::new(&problem);

let res = ne.run(settings).expect("Error: NE result is empty");

println!("result: {:?}", res);

println!("\nbest individual: {:?}", res.best);
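A regression problem needs a fitness value that measures how far the network output is from the target. One common choice is the mean squared error over a set of samples, sketched below. Whether `SymbolicRegressionProblem` uses exactly this measure is an assumption, and `mean_squared_error` is a hypothetical helper that takes any closure in place of the network.

```rust
/// Mean squared error of `model` over (input, target) samples; lower is better.
/// Assumption: `samples` is non-empty (an empty slice yields NaN).
fn mean_squared_error<F: Fn(f32) -> f32>(model: F, samples: &[(f32, f32)]) -> f32 {
    let total: f32 = samples
        .iter()
        .map(|&(x, y)| (model(x) - y).powi(2))
        .sum();
    total / samples.len() as f32
}
```

For a neuroevolution run, `model` would wrap a call to the network's `compute` method and return its first output.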

Create a multilayered neural network with 2 hidden layers, sigmoid activation, and linear output nodes.

const INPUT_SIZE: usize = 20;

const OUTPUT_SIZE: usize = 2;

let mut rng = rand::thread_rng(); // needed for weights initialization when NN is built.

let mut net: MultilayeredNetwork = MultilayeredNetwork::new(INPUT_SIZE, OUTPUT_SIZE);

net.add_hidden_layer(30 as usize, ActivationFunctionType::Sigmoid)

.add_hidden_layer(20 as usize, ActivationFunctionType::Sigmoid)

.build(&mut rng, NeuralArchitecture::Multilayered); // `build` finishes creation of neural network.

let (ws, bs) = net.get_weights(); // `ws` and `bs` are `Vec` arrays containing weights and biases for each layer.

assert!(ws.len() == 3); // the number of elements equals the number of hidden layers + 1 output layer

assert!(bs.len() == 3); // the number of elements equals the number of hidden layers + 1 output layer
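The assertions above hold because every layer after the input, including the output layer, owns one weight matrix and one bias vector. The sketch below shows how such a list of layers can be evaluated. It is a simplified standalone model with hypothetical names (`Layer`, `forward`, `use_sigmoid`), not revonet's `MultilayeredNetwork` implementation.

```rust
/// Logistic sigmoid activation.
fn sigmoid(x: f32) -> f32 {
    1.0 / (1.0 + (-x).exp())
}

/// One dense layer (hypothetical type): `weights[j]` holds the incoming
/// weights of neuron `j`, `biases[j]` its bias.
struct Layer {
    weights: Vec<Vec<f32>>,
    biases: Vec<f32>,
    use_sigmoid: bool, // false means a linear (identity) neuron
}

/// Propagate `input` through the layers in order and return the last output.
fn forward(layers: &[Layer], input: &[f32]) -> Vec<f32> {
    let mut signal = input.to_vec();
    for layer in layers {
        signal = layer
            .weights
            .iter()
            .zip(&layer.biases)
            .map(|(row, &b)| {
                // Weighted sum of the previous layer's outputs plus the bias.
                let sum = row.iter().zip(&signal).map(|(w, x)| w * x).sum::<f32>() + b;
                if layer.use_sigmoid { sigmoid(sum) } else { sum }
            })
            .collect();
    }
    signal
}
```

A network with two sigmoid hidden layers and a linear output would be a slice of three `Layer` values, matching the three entries in `ws` and `bs`.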

Create a custom optimization problem for GA

// Dummy problem returning random fitness.

pub struct DummyProblem;

impl Problem for DummyProblem {

// Function to evaluate a specific individual.

fn compute<T: Individual>(&self, ind: &mut T) -> f32 {

// use `to_vec` to get real-coded representation of an individual.

let v = ind.to_vec().unwrap();

let mut rng: StdRng = StdRng::from_seed(&[0]);

rng.gen::<f32>()

}

}

Create a custom problem for NN evolution

// Dummy problem returning random fitness.

struct RandomNEProblem {}

impl RandomNEProblem {

fn new() -> RandomNEProblem {

RandomNEProblem{}

}

}

impl NeuroProblem for RandomNEProblem {

// return number of NN inputs.

fn get_inputs_num(&self) -> usize {1}

// return number of NN outputs.

fn get_outputs_num(&self) -> usize {1}

// return NN with random weights and a fixed structure. For now the structure should be the same all the time to make sure that crossover is possible. Likely to change in the future.

fn get_default_net(&self) -> MultilayeredNetwork {

let mut rng = rand::thread_rng();

let mut net: MultilayeredNetwork = MultilayeredNetwork::new(self.get_inputs_num(), self.get_outputs_num());

net.add_hidden_layer(5 as usize, ActivationFunctionType::Sigmoid)

.build(&mut rng, NeuralArchitecture::Multilayered);

net

}

// Function to evaluate performance of a given NN.

fn compute_with_net<T: NeuralNetwork>(&self, nn: &mut T) -> f32 {

let mut rng: StdRng = StdRng::from_seed(&[0]);

let input = (0..self.get_inputs_num())

.map(|_| rng.gen::<f32>())

.collect::<Vec<f32>>();

// compute NN output using random input.

let output = nn.compute(&input);

output[0]

}

}

Dependencies

~1–2MB

~41K SLoC